Although the promising Java value types are not imminent, I still enjoy occasionally nosing around the OpenJDK valhalla-dev mailing list to see how things appear to be progressing and to get an idea of what is to come. Admittedly, some discussions are beyond my understanding given my limited exposure to the terms used and the low-level specifics of some of these messages. However, there are occasionally messages and threads that I understand well and find interesting. A recent example of this is the "Empty value type ?" thread.

Rémi Forax starts the thread by asking "Is empty value type targeted for LW1?" The example error message included with that question shows a LinkageError and ClassFormatError with the message "Value Types do not support zero instance size yet". Tobias Hartmann responds, "No, empty value types are not planned to be supported for LW1."

Before moving on to the rest of the thread (which is the part that interested me the most), I'll quickly discuss "LW1." In a message on that same OpenJDK mailing list called "[lworld] LW1 - 'Minimal LWorld'", David Simms states, "we are approaching something 'usable' in terms of 'minimal L World' (LW1)" and "we will be moving of prototyping to milestone stabilization." That same message states that the "label" is "lw1" and the affected-version and fixed-version are both "repo-valhalla". In other words, "LW1" is the label used to track bugs and issues related to work on the "minimal L world" implementation. You can reference John Rose's 19 November 2017 message "abandon all U-types, welcome to L-world (or, what I learned in Burlington)" for an introduction to the "L World" term and what it means in terms of value types.

Returning to the "Empty value type?" thread, Kirk Pepperdine asked a question that also occurred to me, "How can a value type be empty?" He added, "What is an empty integer? An empty string?" He said he was "just curious" and now I was too. Here is a summary of the informative responses:

- Rémi Forax: "type [that] represents the absence of value like unit, void or bottom"

- Rémi Forax: "type that represents the result of a throw"

- Rémi Forax: "type that allows `HashSet<E>` to be defined as `HashMap<E,Empty>`"

  - Brian Goetz's message elaborates on the value of this: "Zero-length values can be quite useful, just not directly. Look at the current implementations of Set that delegate to `HashMap`; all that wasted space. When we have specialized generics, they can specialize to `HashMap<T, empty>`, and that space gets squeezed away to zero."

- Rémi Forax: "transformative type like a marker type that separate arguments" (see message for example of this one)
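To make the `HashSet<E>` as `HashMap<E,Empty>` idea more concrete, here is a minimal sketch in today's Java. It is only an approximation under stated assumptions: `Empty` and `MapBackedSet` are hypothetical names of my own, and `Empty` is an ordinary single-constant enum standing in for an empty value type, so every map entry still stores a reference to it. The promise described in the thread is that a real zero-length value type, combined with specialized generics, would let that per-entry space disappear.

```java
import java.util.HashMap;
import java.util.Map;

// Small demo of the sketch below; all class names here are hypothetical.
final class MapBackedSetDemo {
    public static void main(String[] args) {
        MapBackedSet<String> names = new MapBackedSet<>();
        names.add("Valhalla");
        names.add("Valhalla"); // duplicate, ignored by the backing map
        System.out.println(names.contains("Valhalla"));
        System.out.println(names.size());
    }
}

// Stand-in for an empty value type (assumption: a real empty value type
// would occupy no space; this enum constant is a normal object reference).
enum Empty { INSTANCE }

// A set that delegates all of its work to a map whose values carry no information.
final class MapBackedSet<E> {
    private final Map<E, Empty> map = new HashMap<>();

    // Returns true if the element was not already present.
    boolean add(E element) {
        return map.put(element, Empty.INSTANCE) == null;
    }

    boolean contains(E element) {
        return map.containsKey(element);
    }

    // Returns true if the element was present and has been removed.
    boolean remove(E element) {
        return map.remove(element) != null;
    }

    int size() {
        return map.size();
    }
}
```

The value slot in each entry exists only because the map API requires one, which is the "wasted space" Brian Goetz refers to.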

I also liked the final (as of this writing) Kirk Pepperdine message on that thread where he summarizes, "Feels like a value type version of null."

Incidentally, there are some other interesting messages and threads in the June 2018 Archives of the valhalla-dev mailing list. Here are some of them:

- Karen Kinnear posted the "Valhalla VM notes Wed Jun 6"

  - I don't understand every detail documented here, but it is interesting to see some of the potential timeframes associated with potential features.

- Rémi Forax posted "Integer vs IntBox benchmark"

  - Demonstrates results for `IntBox` (Forax describes this as "a value type that stores an int") contrasted with `int` and `Integer`; the results for `IntBox` are on par with `int` (and significantly better than `Integer`).

  - A link to the benchmark test on GitHub is also provided.

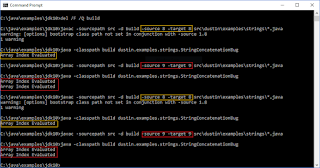

- Sergey Kuksenko posted "Valhalla LWorld microbenchmarks"

  - This message does not provide benchmark results, but instead explains that the "initial set of Valhalla LWorld microbenchmarks" have been placed "in the valhalla repository under `test/benchmarks` directory." It also explains how to build them.

- Mandy Chung posted "Library support for generating BSM for value type's hashCode/equals/toString"

  - Described as "initial library support to generate BSM for `hashCode`/`equals`/`toString` for value types" based on John Rose's "Value type hash code."

  - "BSM" is "bootstrap method"; see the article "Invokedynamic - Java’s Secret Weapon" for additional overview details.

- John Rose posted "constant pool futures"
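Since the "BSM" term in the list above may be unfamiliar, here is a rough sketch of what a bootstrap method that supplies a `hashCode` implementation could look like, written with the standard `java.lang.invoke` API. This is not the library support described in Mandy Chung's message. `IntBox` here is an ordinary final class of my own standing in for "a value type that stores an int", and `HashCodeBootstrap`, `bootstrap`, and `hashOf` are hypothetical names. In real use the JVM would call the bootstrap method when linking an `invokedynamic` call site; the sketch calls it by hand instead.

```java
import java.lang.invoke.CallSite;
import java.lang.invoke.ConstantCallSite;
import java.lang.invoke.MethodHandle;
import java.lang.invoke.MethodHandles;
import java.lang.invoke.MethodType;

// Ordinary final class standing in for a value type that stores an int (assumption).
final class IntBox {
    final int value;

    IntBox(int value) {
        this.value = value;
    }
}

final class HashCodeBootstrap {
    // Hypothetical bootstrap method with the usual leading parameters:
    // a lookup, the invoked name, and the call site's method type.
    static CallSite bootstrap(MethodHandles.Lookup lookup, String name, MethodType type)
            throws ReflectiveOperationException {
        // Method handle that reads IntBox.value: (IntBox)int
        MethodHandle getter = lookup.findGetter(IntBox.class, "value", int.class);
        // Method handle for the static Integer.hashCode(int): (int)int
        MethodHandle hash = lookup.findStatic(
                Integer.class, "hashCode", MethodType.methodType(int.class, int.class));
        // Compose them so that the target computes Integer.hashCode(box.value).
        MethodHandle target = MethodHandles.filterReturnValue(getter, hash);
        return new ConstantCallSite(target.asType(type));
    }

    // Simulates by hand what the JVM does at an invokedynamic call site:
    // link the site through the bootstrap method, then invoke its target.
    static int hashOf(IntBox box) {
        try {
            CallSite site = bootstrap(
                    MethodHandles.lookup(),
                    "hashCode",
                    MethodType.methodType(int.class, IntBox.class));
            return (int) site.dynamicInvoker().invokeExact(box);
        } catch (Throwable t) {
            throw new IllegalStateException("call site linkage or invocation failed", t);
        }
    }
}
```

Calling `HashCodeBootstrap.hashOf(new IntBox(42))` links the call site and returns the hash of the wrapped `int`. The appeal of this style is that the hashing logic is generated from the type's fields at link time rather than written out by hand in each class.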

I look forward to hopefully one day being able to apply value types in my everyday Java code. Until then, it is interesting to think of what might be and also to see how much work is going into making it so.