Most new Java developers quickly learn that they should generally compare Java Strings using String.equals(Object) rather than using ==. This is emphasized and reinforced to new developers repeatedly because they almost always mean to compare String content (the actual characters forming the String) rather than the String's identity (its address in memory). I contend that we should reinforce the notion that == can be used instead of Enum.equals(Object). I provide my reasoning for this assertion in the remainder of this post.

There are four reasons that I believe using == to compare Java enums is almost always preferable to using the "equals" method:

- The == on enums provides the same expected comparison (content) as equals
- The == on enums is arguably more readable (less verbose) than equals
- The == on enums is more null-safe than equals
- The == on enums provides compile-time (static) checking rather than runtime checking

The second reason listed above ("arguably more readable") is obviously a matter of opinion, but that part about "less verbose" can be agreed upon. The first reason I generally prefer == when comparing enums is a consequence of how the Java Language Specification describes enums. Section 8.9 ("Enums") states:

It is a compile-time error to attempt to explicitly instantiate an enum type. The final clone method in Enum ensures that enum constants can never be cloned, and the special treatment by the serialization mechanism ensures that duplicate instances are never created as a result of deserialization. Reflective instantiation of enum types is prohibited. Together, these four things ensure that no instances of an enum type exist beyond those defined by the enum constants.

Because there is only one instance of each enum constant, it is permissible to use the == operator in place of the equals method when comparing two object references if it is known that at least one of them refers to an enum constant. (The equals method in Enum is a final method that merely invokes super.equals on its argument and returns the result, thus performing an identity comparison.)

The excerpt from the specification shown above implies and then explicitly states that it is safe to use the == operator to compare two enums because there is no way that there can be more than one instance of the same enum constant.
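To make the single-instance guarantee concrete, here is a minimal sketch. It assumes a trimmed-down stand-in for the Fruit enum (three constants only), and the class name EnumIdentityDemo and the helper isSameInstance are hypothetical names I introduce purely for illustration. However a constant is obtained (directly, through valueOf, or through values()), the reference should be the same object, so == and equals agree.

```java
class EnumIdentityDemo {
    // Trimmed-down stand-in for the Fruit enum used in this post (assumption).
    enum Fruit { APPLE, CHERRY, WATERMELON }

    /**
     * Looks up a constant by name and reports whether the result is the very
     * same instance as the supplied constant.
     */
    static boolean isSameInstance(String name, Fruit constant) {
        // valueOf throws IllegalArgumentException for an unknown name.
        Fruit lookedUp = Fruit.valueOf(name);
        return lookedUp == constant;
    }

    public static void main(String[] args) {
        Fruit viaValueOf = Fruit.valueOf("CHERRY");
        Fruit viaValues = Fruit.values()[1]; // CHERRY is declared second

        System.out.println(viaValueOf == Fruit.CHERRY);      // true
        System.out.println(viaValueOf.equals(Fruit.CHERRY)); // true
        System.out.println(viaValues == viaValueOf);         // true
        System.out.println(Fruit.APPLE == Fruit.CHERRY);     // false
    }
}
```

Because Enum.equals is final and performs an identity comparison, the two forms cannot disagree for enum constants.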

The fourth advantage to == over .equals when comparing enums has to do with compile-time safety. The use of == forces a stricter compile-time check than that for .equals because Object.equals(Object) must, by contract, take an arbitrary Object. When using a statically typed language such as Java, I believe in taking as much advantage of that static typing as possible. Otherwise, I'd use a dynamically typed language. I believe that one of the recurring themes of Effective Java is just that: prefer static type checking whenever possible.

For example, suppose I had a custom enum called Fruit and I tried to compare it to the class java.awt.Color. Using the == operator allows me to get a compile-time error (including advance notice in my favorite Java IDE) of the problem. Here is a code listing that tries to compare a custom enum to a JDK class using the == operator:

/**

* Indicate if provided Color is a watermelon.

*

* This method's implementation is commented out to avoid a compiler error

* that legitimately disallows == to compare two objects that are not and

* cannot be the same thing ever.

*

* @param candidateColor Color that will never be a watermelon.

* @return Should never be true.

*/

public boolean isColorWatermelon(java.awt.Color candidateColor)

{

// This comparison of Fruit to Color will lead to compiler error:

// error: incomparable types: Fruit and Color

return Fruit.WATERMELON == candidateColor;

}

The compiler error is shown in the screen snapshot that comes next.

Although I'm no fan of errors, I prefer them to be caught statically at compile time rather than depending on runtime coverage. Had I used the equals method for this comparison, the code would have compiled fine, but the method would always return false because there is no way a dustin.examples.Fruit enum will be equal to a java.awt.Color instance. I don't recommend it, but here is the comparison method using .equals:

/**

* Indicate whether provided Color is a Raspberry. This is utter nonsense

* because a Color can never be equal to a Fruit, but the compiler allows this

* check and only a runtime determination can indicate that they are not

* equal even though they can never be equal. This is how NOT to do things.

*

* @param candidateColor Color that will never be a raspberry.

* @return {@code false}. Always.

*/

public boolean isColorRaspberry(java.awt.Color candidateColor)

{

//

// DON'T DO THIS: Waste of effort and misleading code!!!!!!!!

//

return Fruit.RASPBERRY.equals(candidateColor);

}

The "nice" thing about the above is the lack of compile-time errors. It compiles beautifully. Unfortunately, this is paid for with a potentially high price.
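The same trap exists between two unrelated enum types, which is probably the more common mistake in practice. The following is only a sketch: the Vegetable enum and the class and method names are hypothetical and exist solely for this illustration. The equals version compiles and quietly returns false, while the == version (left commented out so the listing compiles) is rejected by the compiler.

```java
class EnumTypeCheckDemo {
    // Trimmed-down stand-in for the Fruit enum used in this post (assumption).
    enum Fruit { RASPBERRY, WATERMELON }

    // Hypothetical second enum, introduced only for this illustration.
    enum Vegetable { CARROT, TOMATO }

    /**
     * Compiles because equals accepts any Object, but a Fruit can never be
     * equal to a Vegetable, so the result is always false.
     */
    static boolean equalsAcrossTypes(Fruit fruit, Vegetable vegetable) {
        return fruit.equals(vegetable);
    }

    // The equivalent == comparison does not compile, because the two enum
    // types are unrelated and no cast between them is possible:
    //
    // static boolean identityAcrossTypes(Fruit fruit, Vegetable vegetable) {
    //     return fruit == vegetable; // rejected at compile time
    // }

    public static void main(String[] args) {
        System.out.println(equalsAcrossTypes(Fruit.RASPBERRY, Vegetable.CARROT)); // false
    }
}
```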

The remaining advantage I listed of using == rather than Enum.equals when comparing enums is the avoidance of the dreaded NullPointerException. As I stated in Effective Java NullPointerException Handling, I generally like to avoid unexpected NullPointerExceptions. There is a limited set of situations in which I truly want the existence of a null to be treated as an exceptional case, but often I prefer a more graceful reporting of a problem. An advantage of comparing enums with == is that a null can be compared to a non-null enum without encountering a NullPointerException (NPE). The result of this comparison, obviously, is false.

One way to avoid the NPE when using .equals(Object) is to invoke the equals method against an enum constant or a known non-null enum and then pass the potential enum of questionable character (possibly null) as a parameter to the equals method. This has been done for years in Java with Strings to avoid the NPE. However, with the == operator, order of comparison does not matter. I like that.
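The three orderings just described can be put side by side in a small sketch. The class and method names here are hypothetical, and the two-constant Fruit enum is a stand-in for the fuller one used in this post.

```java
class EnumNullSafetyDemo {
    // Trimmed-down stand-in for the Fruit enum used in this post (assumption).
    enum Fruit { GRAPE, RASPBERRY }

    /** Null-safe: == simply yields false when candidate is null. */
    static boolean isGrapeByIdentity(Fruit candidate) {
        return candidate == Fruit.GRAPE;
    }

    /** Null-safe: equals is invoked on the constant, never on the candidate. */
    static boolean isGrapeConstantFirst(Fruit candidate) {
        return Fruit.GRAPE.equals(candidate);
    }

    /** NOT null-safe: throws NullPointerException when candidate is null. */
    static boolean isGrapeCandidateFirst(Fruit candidate) {
        return candidate.equals(Fruit.GRAPE);
    }

    public static void main(String[] args) {
        System.out.println(isGrapeByIdentity(null));    // false
        System.out.println(isGrapeConstantFirst(null)); // false
        try {
            isGrapeCandidateFirst(null);
        } catch (NullPointerException npe) {
            System.out.println("NPE from calling equals on null");
        }
    }
}
```

Only the last method depends on the caller never passing null, and nothing in its signature warns the caller about that.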

I've made my arguments and now I move on to some code examples. The next listing is a realization of the hypothetical Fruit enum mentioned earlier.

Fruit.java

package dustin.examples;

public enum Fruit

{

APPLE,

BANANA,

BLACKBERRY,

BLUEBERRY,

CHERRY,

GRAPE,

KIWI,

MANGO,

ORANGE,

RASPBERRY,

STRAWBERRY,

TOMATO,

WATERMELON

}

The next code listing is a simple Java class that provides methods for detecting if a particular enum or object is a certain fruit. I'd normally put checks like these in the enum itself, but they work better in a separate class here for my illustrative and demonstrative purposes. This class includes the two methods shown earlier for comparing Fruit to Color with both == and equals. Of course, the method using == to compare an enum to a class had to have that part commented out to compile properly.

EnumComparisonMain.java

package dustin.examples;

public class EnumComparisonMain

{

/**

* Indicate whether provided fruit is a watermelon ({@code true}) or not

* ({@code false}).

*

* @param candidateFruit Fruit that may or may not be a watermelon; null is

* perfectly acceptable (bring it on!).

* @return {@code true} if provided fruit is watermelon; {@code false} if

* provided fruit is NOT a watermelon.

*/

public boolean isFruitWatermelon(Fruit candidateFruit)

{

return candidateFruit == Fruit.WATERMELON;

}

/**

* Indicate whether provided object is a Fruit.WATERMELON ({@code true}) or

* not ({@code false}).

*

* @param candidateObject Object that may or may not be a watermelon and may

* not even be a Fruit!

* @return {@code true} if provided object is a Fruit.WATERMELON;

* {@code false} if provided object is not Fruit.WATERMELON.

*/

public boolean isObjectWatermelon(Object candidateObject)

{

return candidateObject == Fruit.WATERMELON;

}

/**

* Indicate if provided Color is a watermelon.

*

* This method's implementation is commented out to avoid a compiler error

* that legitimately disallows == to compare two objects that are not and

* cannot be the same thing ever.

*

* @param candidateColor Color that will never be a watermelon.

* @return Should never be true.

*/

public boolean isColorWatermelon(java.awt.Color candidateColor)

{

// Had to comment out comparison of Fruit to Color to avoid compiler error:

// error: incomparable types: Fruit and Color

return /*Fruit.WATERMELON == candidateColor*/ false;

}

/**

* Indicate whether provided fruit is a strawberry ({@code true}) or not

* ({@code false}).

*

* @param candidateFruit Fruit that may or may not be a strawberry; null is

* perfectly acceptable (bring it on!).

* @return {@code true} if provided fruit is strawberry; {@code false} if

* provided fruit is NOT strawberry.

*/

public boolean isFruitStrawberry(Fruit candidateFruit)

{

return Fruit.STRAWBERRY == candidateFruit;

}

/**

* Indicate whether provided fruit is a raspberry ({@code true}) or not

* ({@code false}).

*

* @param candidateFruit Fruit that may or may not be a raspberry; null is

* completely and entirely unacceptable; please don't pass null, please,

* please, please.

* @return {@code true} if provided fruit is raspberry; {@code false} if

* provided fruit is NOT raspberry.

*/

public boolean isFruitRaspberry(Fruit candidateFruit)

{

return candidateFruit.equals(Fruit.RASPBERRY);

}

/**

* Indicate whether provided Object is a Fruit.RASPBERRY ({@code true}) or

* not ({@code false}).

*

* @param candidateObject Object that may or may not be a Raspberry and may

* or may not even be a Fruit!

* @return {@code true} if provided Object is a Fruit.RASPBERRY; {@code false}

* if it is not a Fruit or not a raspberry.

*/

public boolean isObjectRaspberry(Object candidateObject)

{

return candidateObject.equals(Fruit.RASPBERRY);

}

/**

* Indicate whether provided Color is a Raspberry. This is utter nonsense

* because a Color can never be equal to a Fruit, but the compiler allows this

* check and only a runtime determination can indicate that they are not

* equal even though they can never be equal. This is how NOT to do things.

*

* @param candidateColor Color that will never be a raspberry.

* @return {@code false}. Always.

*/

public boolean isColorRaspberry(java.awt.Color candidateColor)

{

//

// DON'T DO THIS: Waste of effort and misleading code!!!!!!!!

//

return Fruit.RASPBERRY.equals(candidateColor);

}

/**

* Indicate whether provided fruit is a grape ({@code true}) or not

* ({@code false}).

*

* @param candidateFruit Fruit that may or may not be a grape; null is

* perfectly acceptable (bring it on!).

* @return {@code true} if provided fruit is a grape; {@code false} if

* provided fruit is NOT a grape.

*/

public boolean isFruitGrape(Fruit candidateFruit)

{

return Fruit.GRAPE.equals(candidateFruit);

}

}

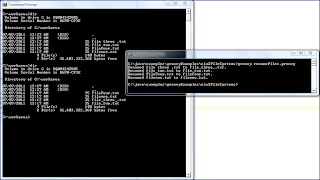

I decided to approach demonstration of the ideas captured in the above methods via unit tests. In particular, I make use of Groovy's GroovyTestCase. The Groovy-powered unit test class is in the next code listing.

EnumComparisonTest.groovy

package dustin.examples

class EnumComparisonTest extends GroovyTestCase

{

private EnumComparisonMain instance

void setUp() {instance = new EnumComparisonMain()}

/**

* Demonstrate that while null is not watermelon, this check does not lead to

* NPE because watermelon check uses {@code ==} rather than {@code .equals}.

*/

void testIsWatermelonIsNotNull()

{

assertEquals("Null cannot be watermelon", false, instance.isFruitWatermelon(null))

}

/**

* Demonstrate that a passed-in Object that is really a disguised Fruit can

* be compared to the enum and found to be equal.

*/

void testIsWatermelonDisguisedAsObjectStillWatermelon()

{

assertEquals("Object should have been watermelon",

true, instance.isObjectWatermelon(Fruit.WATERMELON))

}

/**

* Demonstrate that a passed-in Object that is really a disguised Fruit can

* be compared to the enum and found to be not equal.

*/

void testIsOtherFruitDisguisedAsObjectNotWatermelon()

{

assertEquals("Fruit disguised as Object should NOT be watermelon",

false, instance.isObjectWatermelon(Fruit.ORANGE))

}

/**

* Demonstrate that a passed-in Object that is really a null can be compared

* without NPE to the enum and that they will be considered not equal.

*/

void testIsNullObjectNotEqualToWatermelonWithoutNPE()

{

assertEquals("Null, even as Object, is not equal to Watermelon",

false, instance.isObjectWatermelon(null))

}

/** Demonstrate that test works when provided fruit is indeed watermelon. */

void testIsWatermelonAWatermelon()

{

assertEquals("Watermelon expected for fruit", true, instance.isFruitWatermelon(Fruit.WATERMELON))

}

/** Demonstrate that fruit other than watermelon is not a watermelon. */

void testIsWatermelonBanana()

{

assertEquals("A banana is not a watermelon", false, instance.isFruitWatermelon(Fruit.BANANA))

}

/**

* Demonstrate that while null is not strawberry, this check does not lead to

* NPE because strawberry check uses {@code ==} rather than {@code .equals}.

*/

void testIsStrawberryIsNotNull()

{

assertEquals("Null cannot be strawberry", false, instance.isFruitStrawberry(null))

}

/**

* Demonstrate that raspberry case throws NPE because of attempt to invoke

* {@code .equals} method on null.

*/

void testIsFruitRaspberryThrowsNpeForNull()

{

shouldFail(NullPointerException)

{

instance.isFruitRaspberry(null)

}

}

/**

* Demonstrate that raspberry case throws NPE even for Object because of

* attempt to invoke {@code .equals} method on null.

*/

void testIsObjectRaspberryThrowsNpeForNull()

{

shouldFail(NullPointerException)

{

instance.isObjectRaspberry(null)

}

}

/**

* Demonstrate that {@code .equals} approach works for comparing enums even if

* {@code .equals} is invoked on passed-in object.

*/

void testIsRaspberryDisguisedAsObjectRaspberry()

{

assertEquals("Expected object to be raspberry",

true, instance.isObjectRaspberry(Fruit.RASPBERRY))

}

/**

* Demonstrate that while null is not grape, this check does not lead to NPE

* because grape check invokes {@code .equals} method on the enum constant

* being compared rather than on the provided {@code null}.

*/

void testIsGrapeIsNotNull()

{

assertEquals("Null cannot be grape", false, instance.isFruitGrape(null))

}

}

I don't describe what the various tests demonstrate much here because the comments on the tested class's methods and on the test methods cover most of that. In short, the variety of tests demonstrate that

== is null-safe, and that

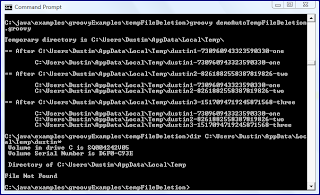

equals is not null safe unless invoked on an enum constant or known non-null enum. There are a few other tests to ensure that the comparisons work as we'd expect them to work. The output from running the unit tests is shown next.

The GroovyTestCase method shouldFail reported that an NPE was indeed thrown when code attempted to call equals on an advertised enum that was really null. Because I used the overloaded version of this method that accepted the expected exception as a parameter, I was able to ensure that it was an NPE that was thrown. The next screen snapshot shows what I would have seen if I had told the unit test that an IllegalArgumentException was expected rather than a NullPointerException.

I'm not the only one who thinks that == is preferable to equals when comparing enums. After I started writing this (because I saw what I deem to be a misuse of equals for comparing two enums again today for the umpteenth time), I discovered other posts making the same argument. The post == or equals with Java enum highlights the compile-time advantage of explicitly comparing two distinct types with ==: "Using == the compiler screams and tells us that we are comparing apples with oranges." Ashutosh's post Comparing Java Enums concludes that "using == over .equals() is more advantageous in two ways" (avoiding the NPE and getting the static compile-time check). The latter post also pointed me to the excellent reference Comparing Java enum members: == or equals()?.

The Dissenting View

All three references just mentioned had dissenting opinions either in the thread (the last reference) or as comments to the blog post (the first two references). The primary argument for comparing enums with equals rather than == is consistency with the comparison of other reference types (non-primitives). There is also an argument that equals is in a way more readable because a less experienced Java developer won't think it is wrong, whereas he or she might think == is a bad idea. My response is that it is good to know the difference, and the question could provide a valuable teaching experience. Another argument against == is that equals gets compiled into the == form anyway. I don't see that so much as an argument against using ==. In fact, it really is a moot point in my mind if they are the same thing in byte code anyway. Furthermore, it doesn't take away from the advantages of == in terms of null safety and static type checking.

Don't Forget !=

All of the above arguments apply to the question of != versus !Enum.equals(Object), of course. The Java Tutorials section Getting and Setting Fields with Enum Types demonstrates comparing the same enum types and their example makes use of !=.
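As a quick sketch of the negated forms (the class and method names are hypothetical, and the two-constant Fruit enum is a stand-in for the one in this post), the != version stays null-safe while the negated equals version inherits the same NPE risk when invoked on the candidate:

```java
class EnumNotEqualsDemo {
    // Trimmed-down stand-in for the Fruit enum used in this post (assumption).
    enum Fruit { TOMATO, WATERMELON }

    /** Null-safe: a null candidate is simply "not a tomato". */
    static boolean isNotTomato(Fruit candidate) {
        return candidate != Fruit.TOMATO;
    }

    /** NOT null-safe: throws NullPointerException when candidate is null. */
    static boolean isNotTomatoViaEquals(Fruit candidate) {
        return !candidate.equals(Fruit.TOMATO);
    }

    public static void main(String[] args) {
        System.out.println(isNotTomato(Fruit.WATERMELON));          // true
        System.out.println(isNotTomato(Fruit.TOMATO));              // false
        System.out.println(isNotTomato(null));                      // true
        System.out.println(isNotTomatoViaEquals(Fruit.WATERMELON)); // true
    }
}
```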

Conclusion

There is very little in software development that can be stated unequivocally and without controversy. The use of equals versus == for comparing enums is no exception to this. However, I have no problem comparing primitives in Java (as long as I ensure they are true primitives and not unboxed references) with == and likewise have no problem comparing enums with == either. The specification explicitly spells out that this is permissible as does the Java Tutorials (which uses != rather than !Enum.equals). I actually go further than that and state that it is preferable. If I can avoid NullPointerExceptions and still get the response I want (two compared things are not equal) and can move my error checking up to compile time all without extra effort, I'll take that deal just about every time.